History of Docker

Early Evolution of Containers

- Now that we understand what containers are, it helps to look at how they’ve evolved, to put things in perspective.

- The main idea behind containers is to isolate multiple processes running on the same host. Tools offering some level of process isolation go back several decades: chroot, introduced in 1979, made it possible to change the root directory of a process and its children to a new location in the filesystem.

- About two decades later, in 2000, FreeBSD extended the concept with jails, which offered more advanced process isolation through operating-system-level virtualization. FreeBSD jails provided more explicit isolation, with their own network interfaces and IP addresses.

- This was closely followed by Linux-VServer in 2001, which used a similar mechanism to partition resources like the file system, network addresses, and memory. OpenVZ followed in 2005, also offering operating-system-level virtualization for Linux.

Linux Containers

- Linux Containers, often referred to as LXC, was perhaps the first implementation of a complete container manager.

- It provides operating-system-level virtualization, with a mechanism to limit and prioritize resources such as CPU and memory among multiple applications. Moreover, it allows complete isolation of an application’s process tree, networking, and file systems.

Control Groups (cgroups)

- LXC, which is now available in most Linux distributions, was created in 2008. It builds heavily on kernel work contributed by Google, most notably cgroups.

- Among the kernel features that LXC uses to contain processes and provide isolation, cgroups are especially important for resource limiting.

- Work on cgroups started at Google in 2006 under the name process containers. The feature was renamed control groups in late 2007 and merged into the mainline Linux kernel in version 2.6.24, released in January 2008.

- Put simply, cgroups provide a unified interface in the Linux kernel for limiting, accounting for, and prioritizing the resources that groups of processes use.

- Let’s have a look at the rules we can define to restrict resource usage of processes:

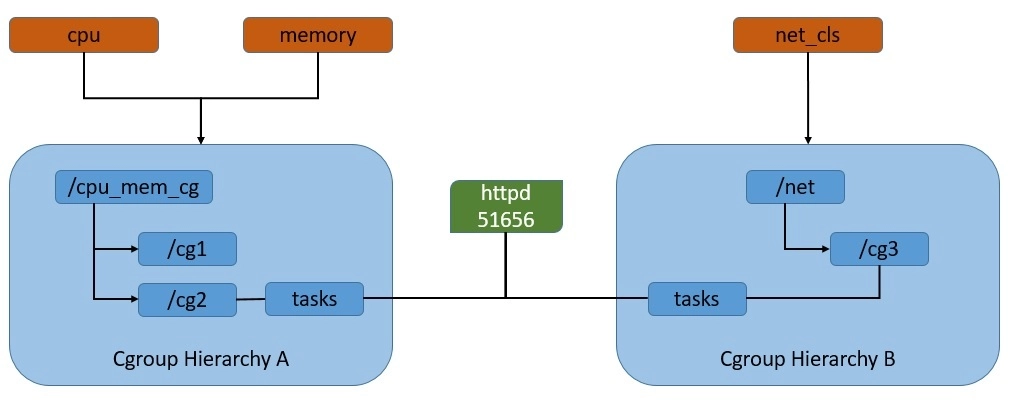

- As we can see here, cgroups work by associating processes with subsystems (also called controllers), each of which represents a single kernel resource such as CPU time or memory. Cgroups are organized hierarchically, much like processes in Linux, so child cgroups inherit some of their attributes from their parent. But unlike processes, cgroups can exist as multiple separate hierarchies.

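- We can attach each hierarchy to one or more subsystems. However, within any one hierarchy, a process can belong to only a single cgroup. (This describes the original cgroup v1 design; cgroup v2 replaces the multiple hierarchies with a single unified one.)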
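- The following minimal Python sketch makes these rules concrete by showing how a resource limit might be applied by hand. It assumes a host running cgroup v2, with the unified hierarchy mounted at `/sys/fs/cgroup` and the `cpu` and `memory` controllers enabled for the parent group. On a cgroup v1 host, each controller has its own hierarchy and different file names. The helper names `cgroup_limits` and `apply_limits` are hypothetical and do not come from any library. The interface files `cpu.max`, `memory.max`, and `cgroup.procs` are the kernel's own.

```python
from pathlib import Path
from typing import Dict

# Assumption: cgroup v2 unified hierarchy mounted here.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def cgroup_limits(cpus: float, memory_bytes: int, period_us: int = 100_000) -> Dict[str, str]:
    """Translate human-friendly limits into cgroup v2 interface file contents.

    cpu.max takes "<quota> <period>" in microseconds, so a quota of half the
    period caps the group at half a CPU. memory.max takes a byte count.
    """
    if cpus <= 0 or memory_bytes <= 0 or period_us <= 0:
        raise ValueError("limits must be positive")
    quota_us = round(cpus * period_us)  # round() avoids float truncation errors
    return {
        "cpu.max": f"{quota_us} {period_us}",
        "memory.max": str(memory_bytes),
    }


def apply_limits(name: str, pid: int, limits: Dict[str, str]) -> None:
    """Hypothetical helper: create a child cgroup, write its limits, move a process in.

    Requires root (or a delegated subtree), and the parent's
    cgroup.subtree_control must already enable the cpu and memory controllers.
    """
    group = CGROUP_ROOT / name
    group.mkdir(exist_ok=True)  # creating a directory creates the cgroup
    for filename, value in limits.items():
        (group / filename).write_text(value)
    # Writing a PID to cgroup.procs migrates that process into the group.
    (group / "cgroup.procs").write_text(str(pid))
```

For example, `apply_limits("demo", os.getpid(), cgroup_limits(cpus=0.5, memory_bytes=256 * 1024 * 1024))` would cap the current process at half a CPU and 256 MiB of memory, as long as it runs with sufficient privileges. Container runtimes typically configure cgroups through these same interface files, either directly or through systemd.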

Namespaces

- Another Linux kernel feature that is critical for LXC to provide process isolation is namespaces. Namespaces partition kernel resources so that one set of processes can see resources that are not visible to other processes. These resources include process IDs, hostnames, user IDs, mount points, and network interfaces, among others.

- chroot only changes a process’s view of the filesystem, so processes confined with it can still see and interfere with one another. Linux namespaces provide more secure isolation across different kinds of resources and hence became the foundation of the Linux container.

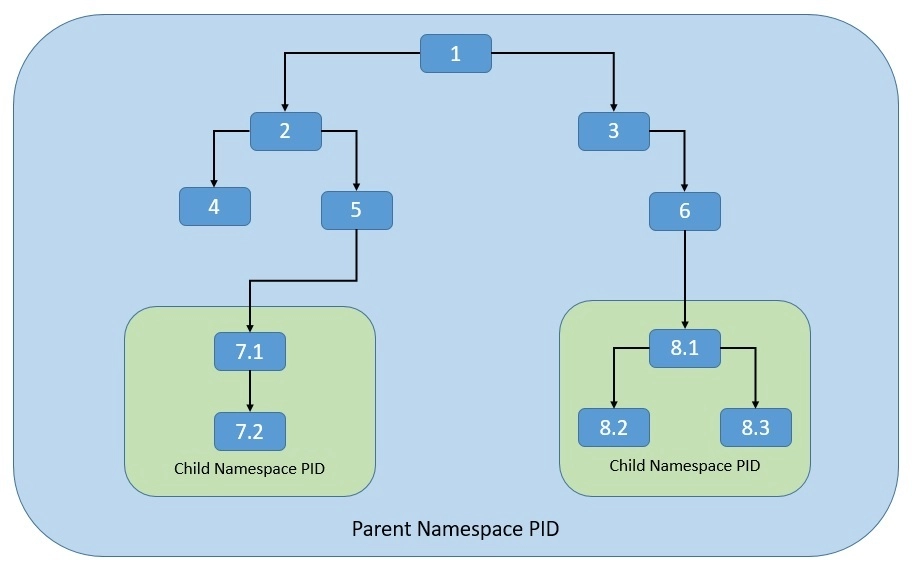

- Let’s see how the process (PID) namespace works. The process model in Linux is a single hierarchy rooted at the init process that starts during system boot. In principle, any process in this hierarchy can inspect other processes, subject to certain permission checks. The PID namespace changes this by allowing multiple nested process trees.

- Here, processes in one process tree remain completely isolated from processes in sibling or parent process trees. However, processes in the parent namespace can still have a complete view of processes in the child namespace.
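- Namespace membership can also be observed from userspace through `/proc`. Each entry in `/proc/<pid>/ns` is a symbolic link whose target has the form `pid:[<inode>]`, which names the namespace type and an inode number. Two processes share a namespace exactly when those targets match. The Python sketch below reads these links. It assumes a Linux host with `/proc` mounted, and `namespaces_of` and `share_namespace` are hypothetical helper names chosen for illustration.

```python
import os
from typing import Dict

# Namespace types exposed under /proc/<pid>/ns on a typical modern kernel.
NS_TYPES = ("pid", "uts", "mnt", "net", "ipc", "user", "cgroup")


def namespaces_of(pid="self") -> Dict[str, str]:
    """Map each namespace type to its identifier for the given process."""
    result: Dict[str, str] = {}
    for ns in NS_TYPES:
        try:
            # The link target looks like "pid:[<inode>]"; the inode identifies the namespace.
            result[ns] = os.readlink(f"/proc/{pid}/ns/{ns}")
        except OSError:
            # Missing /proc, an unsupported namespace type, or no permission
            # to inspect another user's process.
            continue
    return result


def share_namespace(pid_a, pid_b, ns: str = "pid") -> bool:
    """True only if both processes are known to be in the same namespace of type ns."""
    a = namespaces_of(pid_a).get(ns)
    b = namespaces_of(pid_b).get(ns)
    return a is not None and a == b
```

If we run this on the host and then inside a container started with Docker's defaults, `namespaces_of()["pid"]` reports different identifiers, because the container's processes live in a child PID namespace. To experiment without Docker, `sudo unshare --pid --fork --mount-proc bash` from util-linux starts a shell in a new PID namespace, where `ps` shows that shell as PID 1.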

LXC Architecture

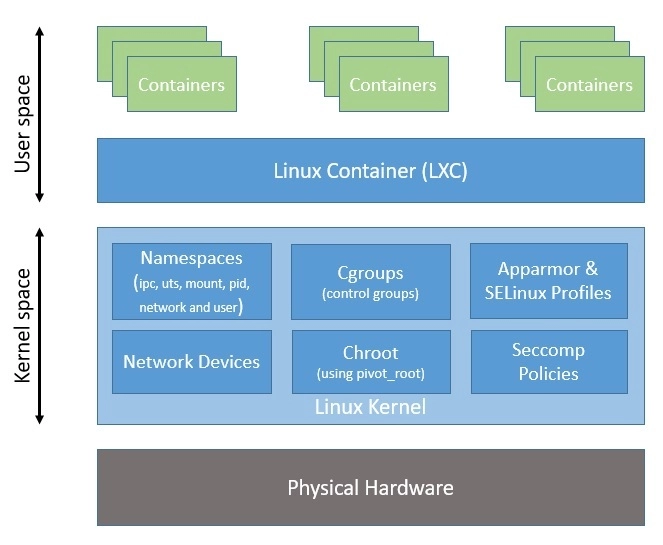

- We’ve seen now that cgroups and namespaces are the foundation of the Linux container.

- Here, as we can see, LXC provides a userspace interface for multiple Linux kernel containment features like namespaces and cgroups. Hence, LXC makes it easier to sandbox processes from one another and control their resource allocation in Linux.

- Please note that all containers share the host’s kernel, which makes them quite lightweight compared to virtual machines, each of which runs its own kernel.

Arrival of Docker

- Although LXC provides a neat and powerful interface at the userspace level, it is still not easy to use, and it never generated mass appeal.

- This is where Docker changed the game. By abstracting away most of the complexity of dealing with kernel features, it provides a simple format for bundling an application and its dependencies into containers.

- Further, it comes with support for automatically building, versioning, and reusing containers. We’ll discuss some of these later in this section.

Relation to LXC

- The Docker project was started by Solomon Hykes as part of dotCloud, a platform-as-a-service company. It was later released as an open-source project in 2013.

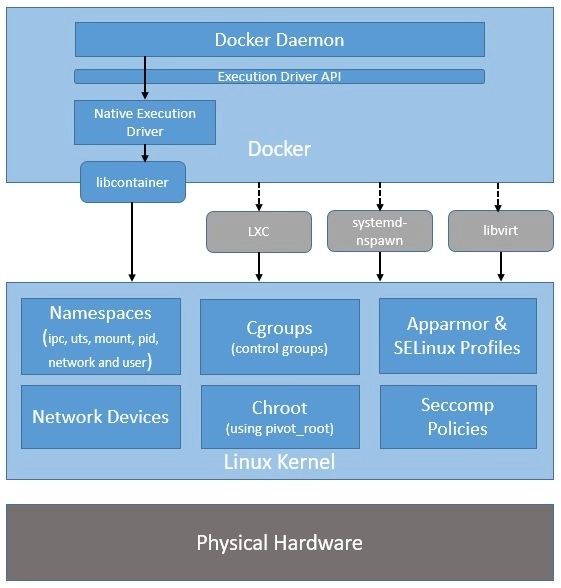

- When it started, Docker used LXC as its default execution environment. However, that was short-lived, and close to a year later, LXC was replaced with an in-house execution environment, libcontainer, written in the Go programming language.

- Please note that while Docker stopped using LXC as its default execution environment, it remained compatible for a while with LXC and, in fact, with other isolation tools like libvirt and systemd-nspawn. This was possible through an execution driver API, which also enabled Docker to run on non-Linux systems.

- The switch to libcontainer allowed Docker to freely manipulate namespaces, cgroups, AppArmor profiles, network interfaces, and firewall rules – all this in a controlled and predictable manner – without depending upon an external package like LXC.

- This insulated Docker from the side effects of different LXC versions and distributions.

Advantages Compared to LXC

- Basically, both Docker and LXC provide operating-system-level virtualization for process isolation. So, how is Docker better than LXC? Let’s see some of the key advantages of Docker over LXC:

- Application-centricity: LXC focuses on providing containers that behave like lightweight machines, whereas Docker is optimized for deploying applications. In fact, Docker containers are designed to run a single application each, which encourages loosely coupled applications.

- Portability: LXC provides a useful abstraction to leverage kernel features for process sandboxing. However, it does not guarantee the portability of the containers. Docker, on the other hand, defines a simple format for bundling an application and its dependencies into containers that are easily portable.

- Layered containers: While LXC is largely neutral to file systems, Docker builds images from read-only file system layers. Each layer records a set of changes on top of the layer below it. Intermediate layers form what we call intermediate images, and a running container adds a thin writable layer on top. This lets us reuse any parent image to create more specialized images.
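- The layering idea can be modeled in a few lines. The Python sketch below is a simplified, hypothetical model and not Docker's storage driver. Each layer is a dictionary of file paths, and a `None` value stands in for a deletion (real OCI image layers record deletions as "whiteout" files). The merged view is computed bottom-up, so upper layers override lower ones. The function names and sample files are illustrative only.

```python
from typing import Dict, List, Optional

# Simplified model: a layer maps file paths to contents; None marks a deletion.
Layer = Dict[str, Optional[str]]


def merged_view(layers: List[Layer]) -> Dict[str, str]:
    """Return the filesystem a container would see, applying layers bottom-up."""
    view: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)  # the deletion hides the lower layer's file
            else:
                view[path] = content  # an upper layer overrides a lower one
    return view


def start_container(image: List[Layer]) -> List[Layer]:
    """Stack a fresh, empty writable layer on top of the image's read-only layers."""
    return image + [{}]


# Hypothetical images built by stacking layers; lower layers are shared, not copied.
base = {"/etc/os-release": "debian", "/bin/sh": "shell"}
python_layer = {"/usr/bin/python3": "python"}
app_layer = {"/app/main.py": "print('hi')", "/bin/sh": None}

python_image = [base, python_layer]
app_image = python_image + [app_layer]
```

Because `app_image` only references `base` and `python_layer` rather than copying them, the same read-only layers can back many images, and any writes a container makes land in its own top layer without touching the image.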

- Please note that, unlike LXC, Docker is not just an interface to kernel isolation features. It comes with several other features that make it powerful as a complete container manager:

- Sharing: Docker comes with a public registry of images known as the Docker Hub. There are thousands of useful, community-contributed images available there that we can use to build our own images.

- Versioning: Docker enables us to version containers, diff between different versions, commit new versions, and roll back to a previous version, all with simple commands. Moreover, we can have complete traceability of how a container was assembled and by whom.

- Tool ecosystem: There’s a growing list of tools that integrate with Docker to extend its capabilities. These include configuration management tools like Chef and Puppet, continuous integration tools like Jenkins and Travis CI, and many more.

Standardization Efforts

- We’ve seen how the concept of process isolation has evolved from chroot to modern-day Docker. Today, apart from LXC and Docker, there are several other alternatives to choose from. Mesos Containerizer from the Apache Software Foundation and rkt from CoreOS have been among the popular ones.

- What is essential for interoperability among these container technologies is the standardization of some of their core components. We have already seen how Docker has transformed itself into a modular architecture built on standardized components like containerd and runc, the reference implementation of the Open Container Initiative (OCI) runtime specification.

Container Orchestration and Beyond

- Containers present a convenient way to package an application in a platform-neutral manner and run it anywhere with confidence.

- However, as the number of containers grows, managing them becomes a challenge of its own.

- This is where container orchestration technologies like Kubernetes have gained popularity. Kubernetes, originally started at Google, is a graduated project of the Cloud Native Computing Foundation (CNCF).

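- Kubernetes automates the deployment, management, and scaling of containers. It supports multiple OCI-compliant container runtimes, such as containerd and CRI-O, through the Container Runtime Interface (CRI). Docker Engine was originally supported through an adapter called dockershim, which was removed in Kubernetes 1.24.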

- Another challenge is related to connecting, securing, controlling, and observing a number of applications running in containers. While it’s possible to set up separate tools to address these concerns, it’s definitely not trivial.

- This is where a service mesh like Istio comes into the picture. A service mesh is a dedicated infrastructure layer that controls how applications share data with one another.
